library(car)

library(dplyr)

library(knitr)

library(ggplot2)

library(gridExtra)

data("Davis")5 Simple Linear Models

5.1 Variances, Covariances, and Correlations

5.1.1 Variance

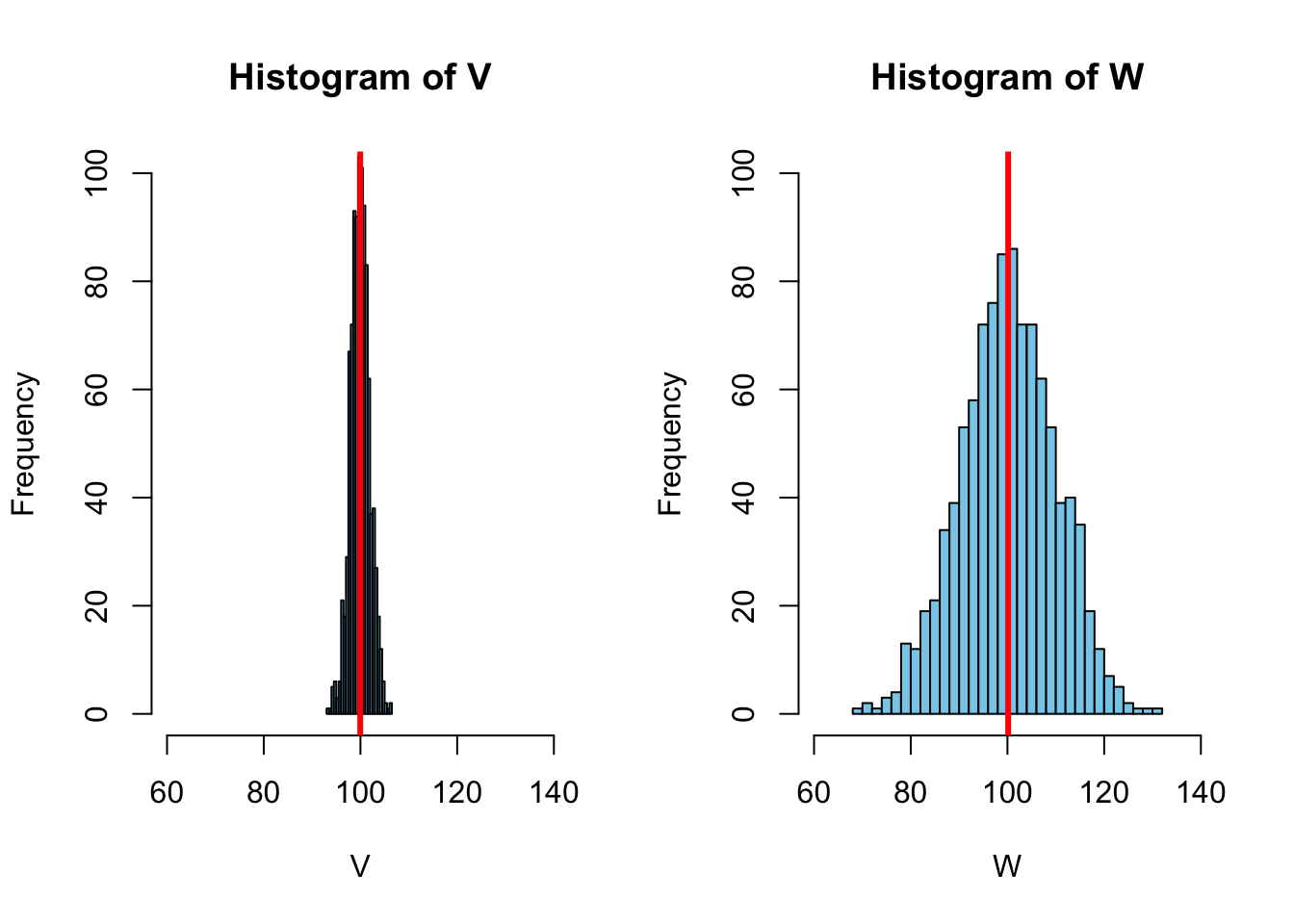

for ( \(n\) = 1,000, \(\bar{X}\) = 100)

The sample variance is defined as:

\[ s_x^2 = \frac{\sum{(X - \overline{X}})^2}{N - 1} = \frac{\sum{x^2}}{N - 1} \tag{2.1} \]

where \(X\) is the variable in question, \(\sum{\mathit{x}^2}\) is the sum of the squared deviations from the mean of \(X\), the latter symbolized as \(\overline{X}\), and \(N\) the sample size.

Defining \(X\) and computing the variance “by hand”:

X <- c(65, 69, 67.5, 75, 62.5, 68, 72, 67, 70, 72, 66.5, 68.5) # height

N <- length(X)

Xbar <- mean(X)

var_x <- sum((X - Xbar)^2)/(N - 1)

var_x[1] 11.35606or simply:

var(X)[1] 11.356065.1.2 Bivariate Relationship

5.1.3 Covariance

The covariance of two variables is defined as

\[ s_{xy} = \frac{\sum{(X - \overline{X})(Y - \overline{Y})}}{N - 1} = \frac{\sum{xy}}{N - 1}. \tag{2.3} \]

Note that we can rewrite the variance equation as

\[ s_{xx} = \frac{\sum{(X - \overline{X})(X - \overline{X})}}{N - 1} = \frac{\sum{xx}}{N - 1}, \]

suggesting that the variance of a variable can be considered its covariance with itself.

dat <- studht[ ,1:2]

names(dat) <- c("X", "Y")

dat <- within(dat, {

`$d_x$` = X - mean(X)

`$d_x^2$` = (X - mean(X))^2

`$d_y$` = Y - mean(Y)

`$d_y^2$` = (Y - mean(Y))^2

})

dat <- dat[ ,c("X", "$d_x$", "$d_x^2$", "Y", "$d_y$", "$d_y^2$")]

kable(dat, digits = 2)| X | \(d_x\) | \(d_x^2\) | Y | \(d_y\) | \(d_y^2\) |

|---|---|---|---|---|---|

| 64 | -3.83 | 14.69 | 65.0 | -3.58 | 12.84 |

| 68 | 0.17 | 0.03 | 69.0 | 0.42 | 0.17 |

| 66 | -1.83 | 3.36 | 67.5 | -1.08 | 1.17 |

| 75 | 7.17 | 51.36 | 75.0 | 6.42 | 41.17 |

| 61 | -6.83 | 46.69 | 62.5 | -6.08 | 37.01 |

| 68 | 0.17 | 0.03 | 68.0 | -0.58 | 0.34 |

| 72 | 4.17 | 17.36 | 72.0 | 3.42 | 11.67 |

| 66 | -1.83 | 3.36 | 67.0 | -1.58 | 2.51 |

| 69 | 1.17 | 1.36 | 70.0 | 1.42 | 2.01 |

| 70 | 2.17 | 4.69 | 72.0 | 3.42 | 11.67 |

| 66 | -1.83 | 3.36 | 66.5 | -2.08 | 4.34 |

| 69 | 1.17 | 1.36 | 68.5 | -0.08 | 0.01 |

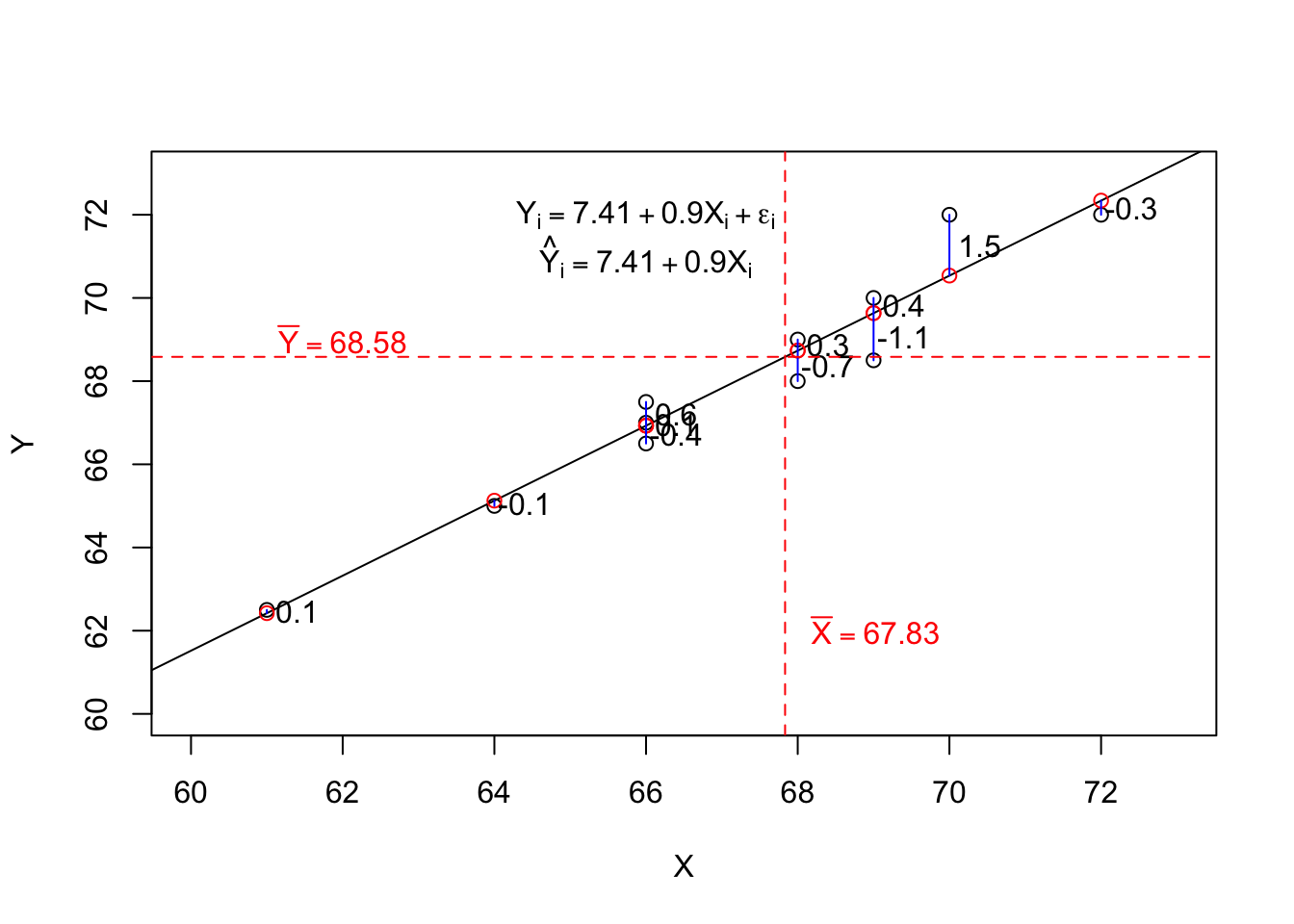

\(\sum{X} = 814\), \(\bar{X} = 67.83\),

\(\sum{Y} = 823\), \(\bar{Y} = 68.58\),

\(\sum{d_x^2} = 147.67\), \(\sum{d_y^2} = 124.92\)

\[ s_x^2 = \frac{\sum{x^2}}{N - 1} = \frac{147.67}{10 - 1} = 13.42 \]

\[ s_x = \sqrt{s_x^2} = 3.66 \]

\[ s_y^2 = \frac{\sum{y^2}}{N - 1} = \frac{124.92}{10 - 1} = 11.36 \]

\[ s_y = \sqrt{s_y^2} = 3.37 \]

| X | \(d_x\) | \(d_x^2\) | Y | \(d_y\) | \(d_y^2\) | \(d_xd_y\) |

|---|---|---|---|---|---|---|

| 64 | -3.83 | 14.69 | 65.0 | -3.58 | 12.84 | 13.74 |

| 68 | 0.17 | 0.03 | 69.0 | 0.42 | 0.17 | 0.07 |

| 66 | -1.83 | 3.36 | 67.5 | -1.08 | 1.17 | 1.99 |

| 75 | 7.17 | 51.36 | 75.0 | 6.42 | 41.17 | 45.99 |

| 61 | -6.83 | 46.69 | 62.5 | -6.08 | 37.01 | 41.57 |

| 68 | 0.17 | 0.03 | 68.0 | -0.58 | 0.34 | -0.10 |

| 72 | 4.17 | 17.36 | 72.0 | 3.42 | 11.67 | 14.24 |

| 66 | -1.83 | 3.36 | 67.0 | -1.58 | 2.51 | 2.90 |

| 69 | 1.17 | 1.36 | 70.0 | 1.42 | 2.01 | 1.65 |

| 70 | 2.17 | 4.69 | 72.0 | 3.42 | 11.67 | 7.40 |

| 66 | -1.83 | 3.36 | 66.5 | -2.08 | 4.34 | 3.82 |

| 69 | 1.17 | 1.36 | 68.5 | -0.08 | 0.01 | -0.10 |

\(\sum{X} = 814\), \(\bar{X} = 67.83\),

\(\sum{Y} = 823\), \(\bar{Y} = 68.58\),

\(\sum{d_xd_y} = 133.17\)

\[ s_{xy} = \frac{\sum{(X - \overline{X})(Y - \overline{Y})}}{N - 1} = \]

\[ \frac{\sum{xy}}{N - 1} = \frac{133.17}{9} =12.11. \]

5.1.4 Correlation

\[r_{XY} = \frac{\text{Cov}(XY)}{s_{X}s_{Y}} = \frac{12.11}{3.66 \times 3.37} = \frac{12.11}{12.35} = 0.98.\]

5.2 Conceptual Demonstration of Simple Regression

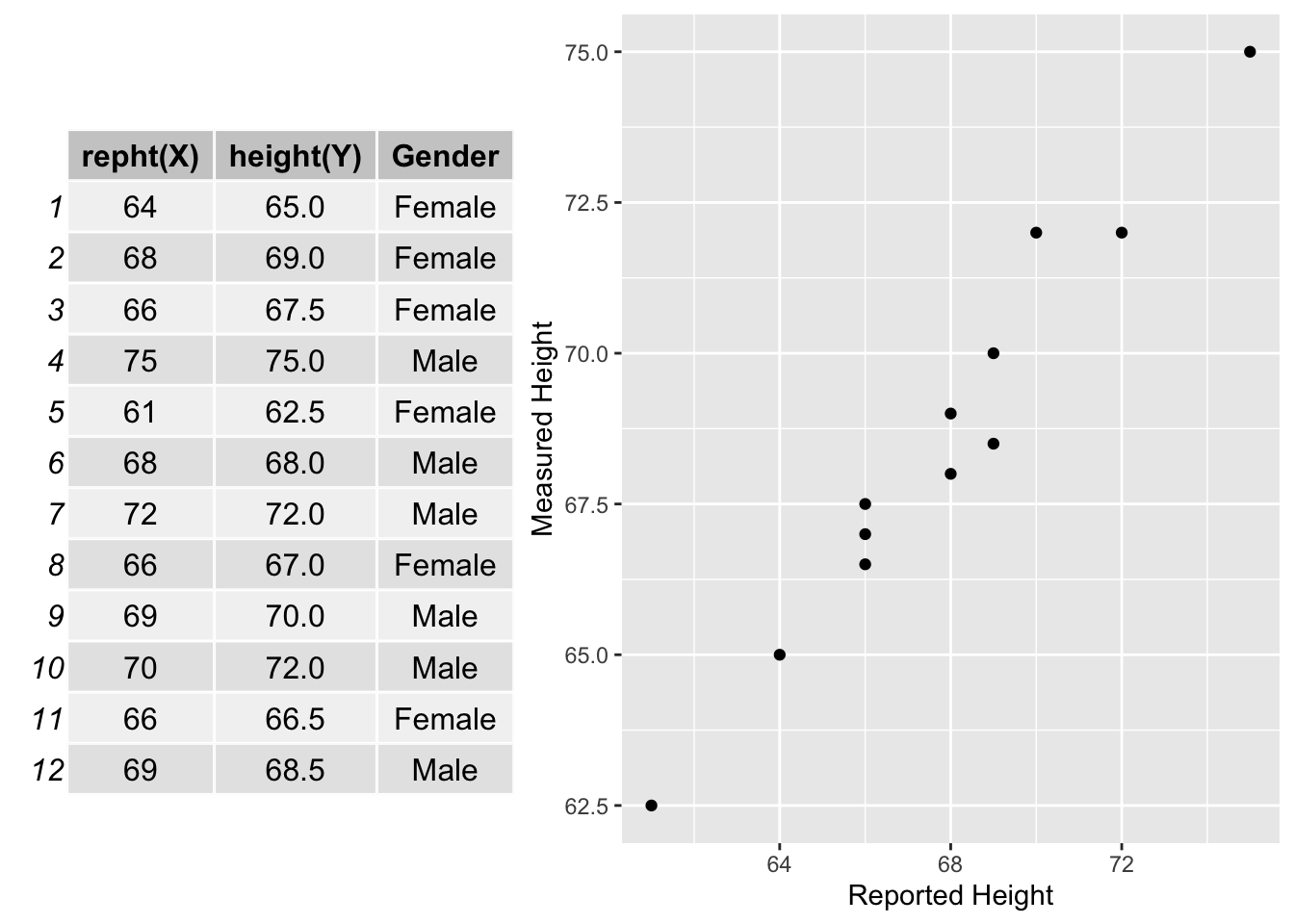

Let’s say we were about to conduct a large study and needed to know the participants heights. But it is very expensive to directly measure each participants height. We could just ask participants to report their height. So, we want to know how accurate people are at reporting their height. To evaluate that we could ask a random sample of people from the study population to report their height and then obtain direct measures of their height and see how strongly those are related. We want to see how well reported height predicts measured height.

Below are statistical models of the relationship between measured height (\(Y\)) and reported height (\(X\)).

Population Model:

\[Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i\]

\[ \hat{Y_i} = \beta_0 + \beta_1 X_i\]

Sample Model:

\[Y_i = b_0 + b_1 X_i + e_i\] \[\hat{Y_i} = b_0 + b_1 X_i\] Below is a data set of graduate students in a research methods class, were I asked them to report their height (\(X\)) and then we measured their height (\(Y\)). This data set is much smaller than we would need to adequately answer our research question, but we will use it to demonstrate some key principles of simple regression.

df <- dat[ ,c("X", "Y")]

kable(df)| X | Y |

|---|---|

| 64 | 65.0 |

| 68 | 69.0 |

| 66 | 67.5 |

| 75 | 75.0 |

| 61 | 62.5 |

| 68 | 68.0 |

| 72 | 72.0 |

| 66 | 67.0 |

| 69 | 70.0 |

| 70 | 72.0 |

| 66 | 66.5 |

| 69 | 68.5 |

\[ \text{Cor}_{XY} = 0.98 \]

cor(df$X, df$Y)[1] 0.98049215.2.0.1 Data Summary

kable(psych::describe(df, fast = TRUE), digits = 2)| vars | n | mean | sd | median | min | max | range | skew | kurtosis | se | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| X | 1 | 12 | 67.83 | 3.66 | 68.00 | 61.0 | 75 | 14.0 | 0.10 | -0.51 | 1.06 |

| Y | 2 | 12 | 68.58 | 3.37 | 68.25 | 62.5 | 75 | 12.5 | 0.13 | -0.72 | 0.97 |

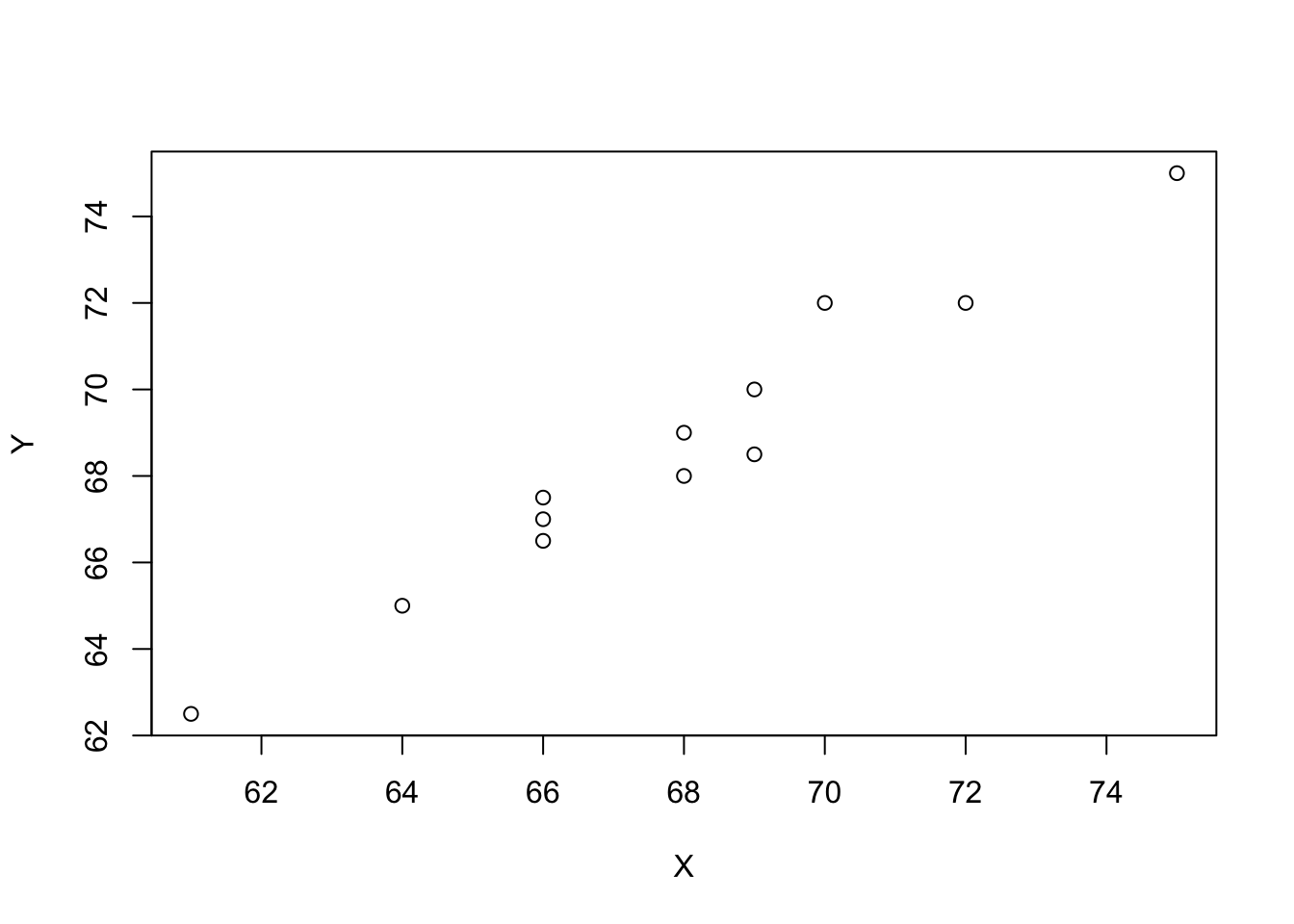

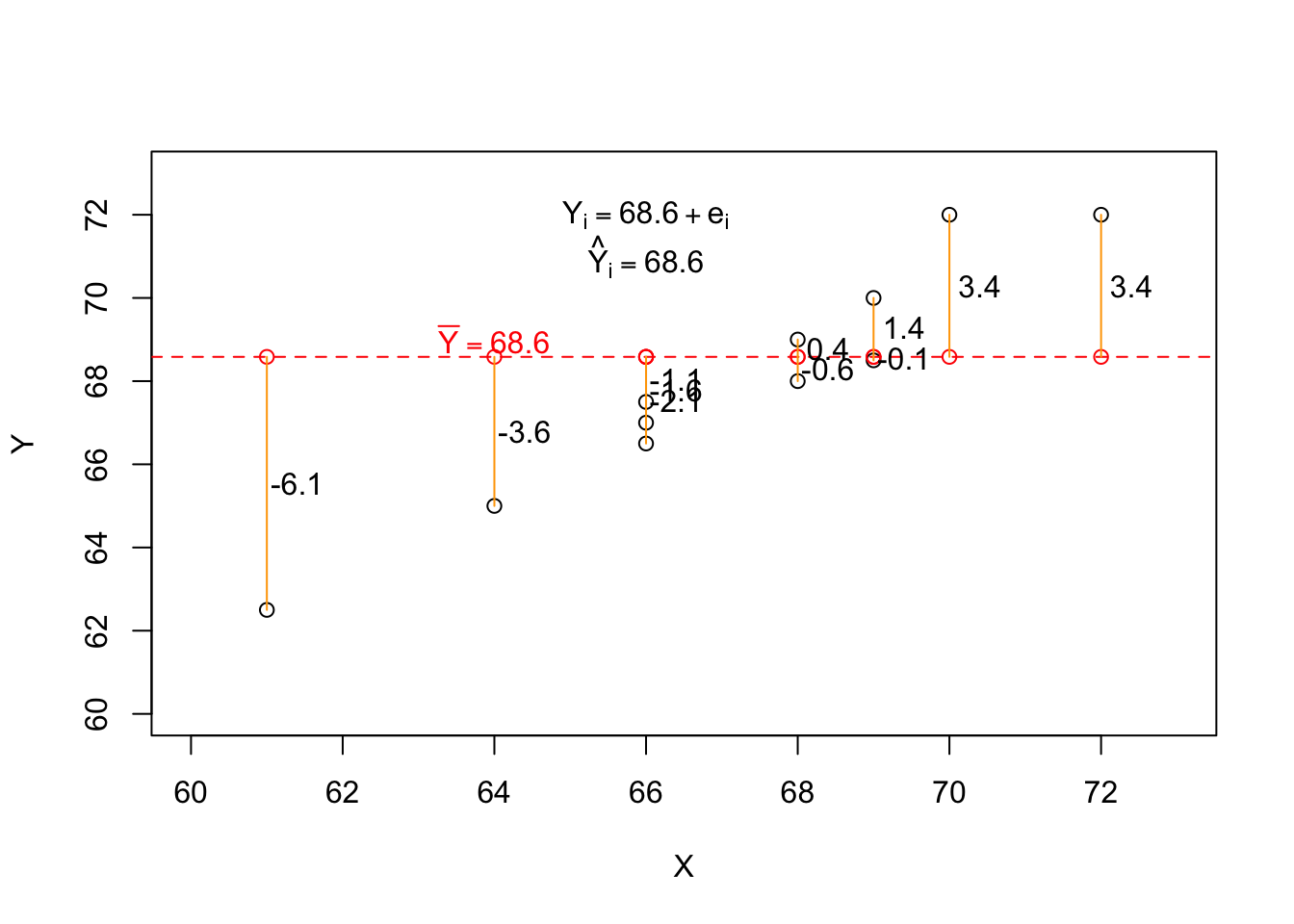

5.2.1 Graphical Demonstration of Simple Regression

plot(Y ~ X, data = df)

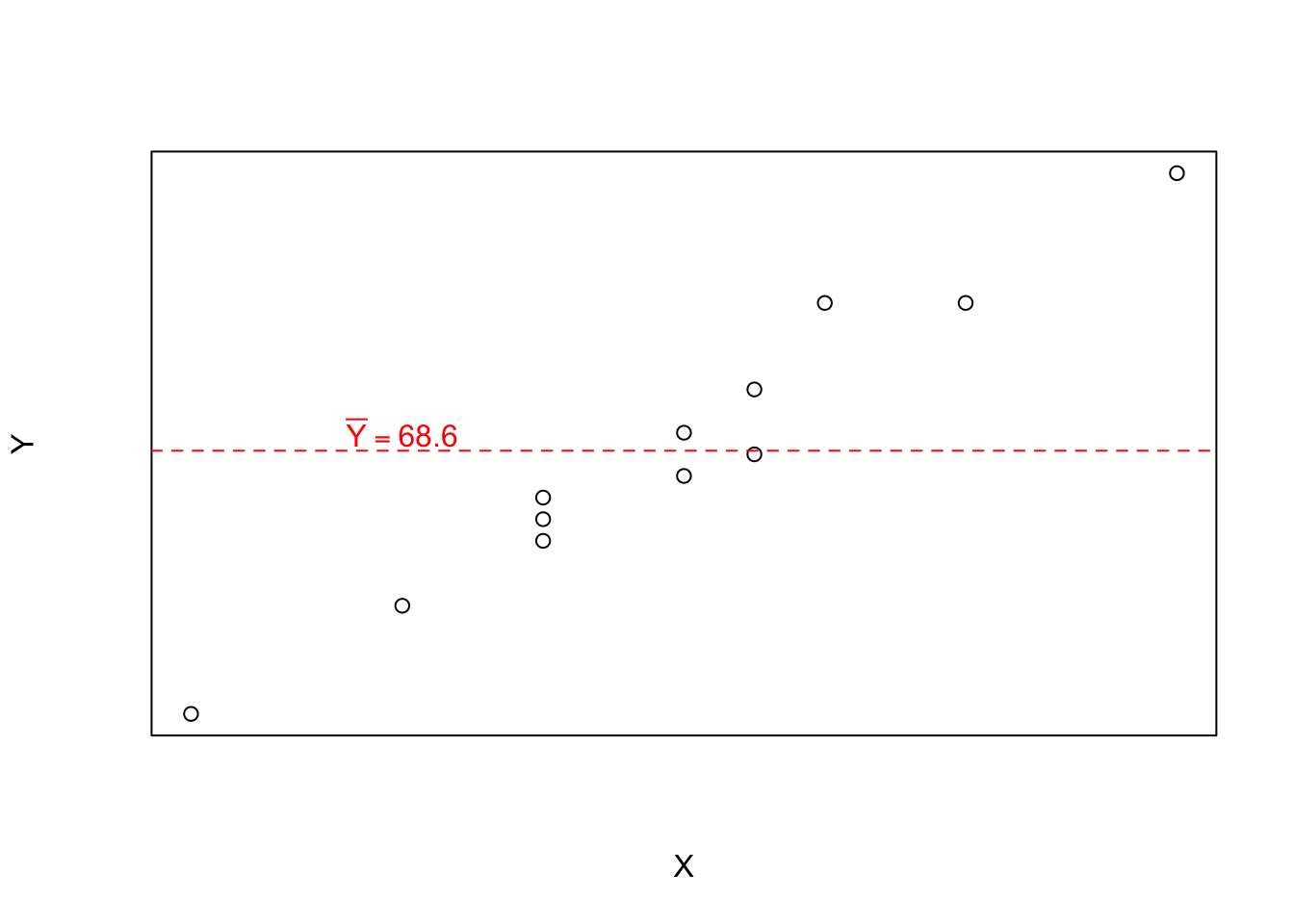

Ybar <- mean(df$Y)

Ybar[1] 68.58333# Empty Model

emptyModel <- lm(Y ~ 1, data = df)

coef(emptyModel)(Intercept)

68.58333 # Predicted value

predict(emptyModel) 1 2 3 4 5 6 7 8

68.58333 68.58333 68.58333 68.58333 68.58333 68.58333 68.58333 68.58333

9 10 11 12

68.58333 68.58333 68.58333 68.58333

df$d <- df$Y - mean(df$Y)| X | Y | d |

|---|---|---|

| 64 | 65.0 | -3.6 |

| 68 | 69.0 | 0.4 |

| 66 | 67.5 | -1.1 |

| 75 | 75.0 | 6.4 |

| 61 | 62.5 | -6.1 |

| 68 | 68.0 | -0.6 |

| 72 | 72.0 | 3.4 |

| 66 | 67.0 | -1.6 |

| 69 | 70.0 | 1.4 |

| 70 | 72.0 | 3.4 |

| 66 | 66.5 | -2.1 |

| 69 | 68.5 | -0.1 |

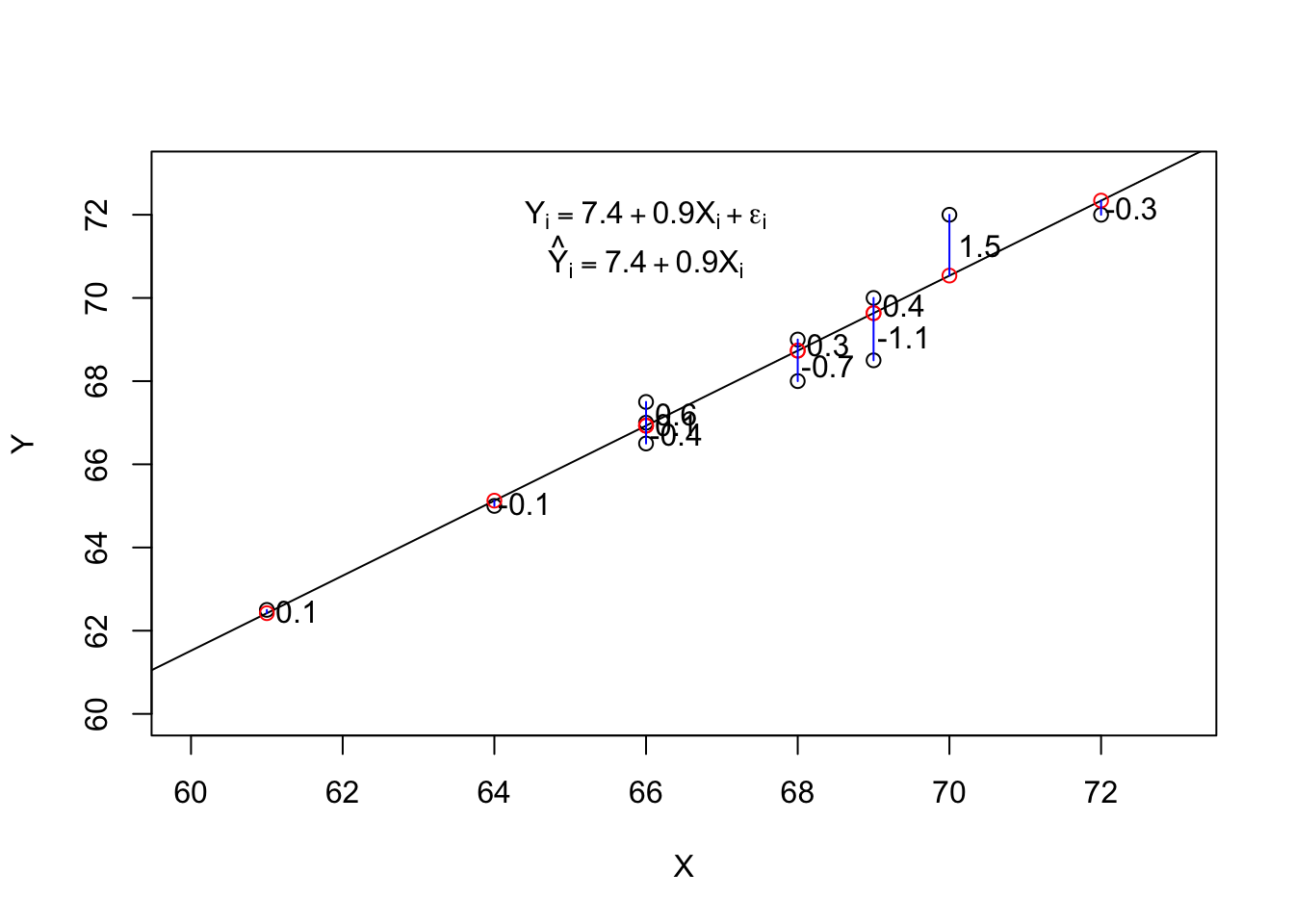

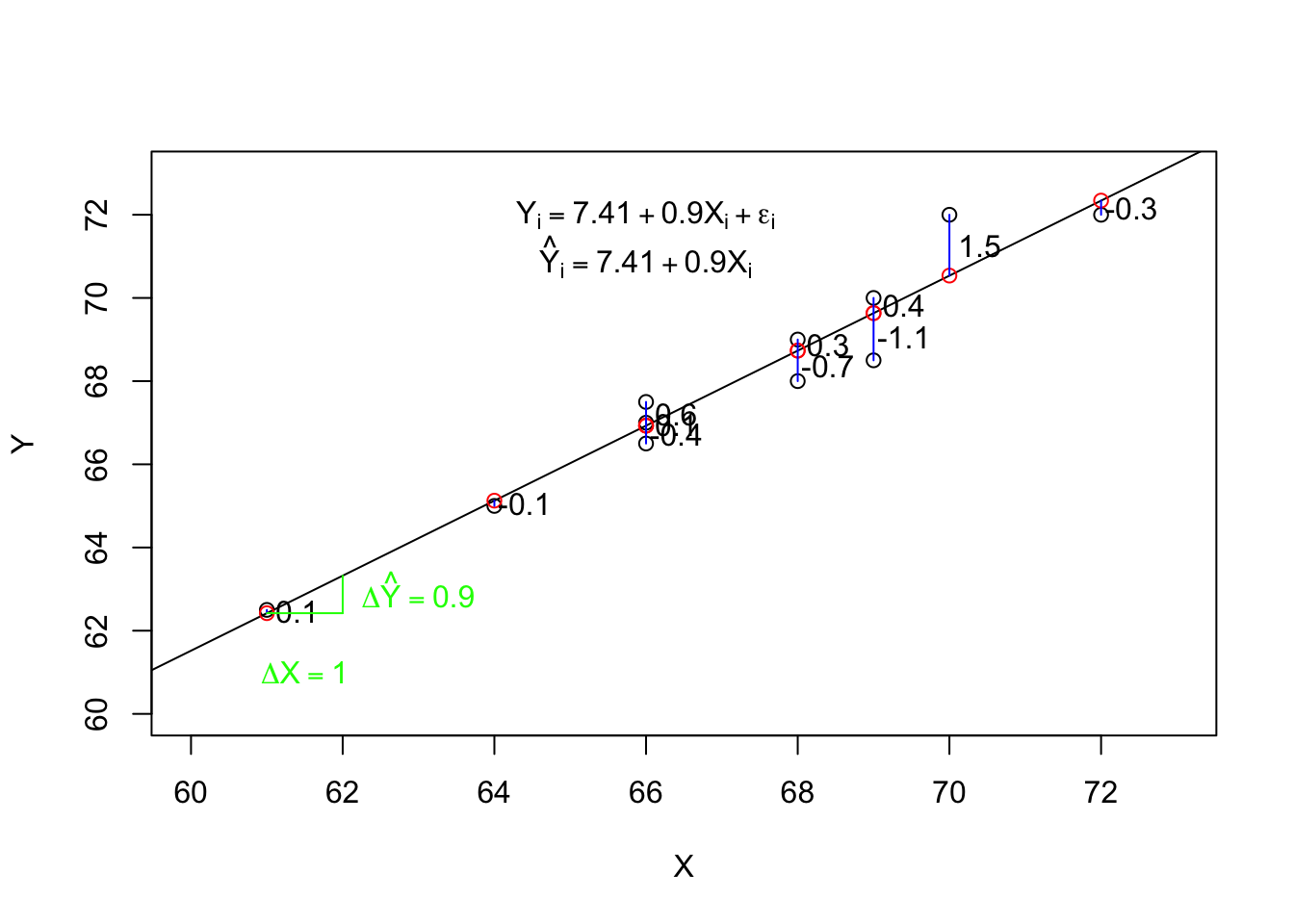

simpleReg <- lm(Y ~ X, data = df)

print(coef(simpleReg), digits = 2)(Intercept) X

7.4 0.9 df$resid <- resid(simpleReg)| X | Y | d | resid |

|---|---|---|---|

| 64 | 65.0 | -3.6 | -0.1 |

| 68 | 69.0 | 0.4 | 0.3 |

| 66 | 67.5 | -1.1 | 0.6 |

| 75 | 75.0 | 6.4 | 0.0 |

| 61 | 62.5 | -6.1 | 0.1 |

| 68 | 68.0 | -0.6 | -0.7 |

| 72 | 72.0 | 3.4 | -0.3 |

| 66 | 67.0 | -1.6 | 0.1 |

| 69 | 70.0 | 1.4 | 0.4 |

| 70 | 72.0 | 3.4 | 1.5 |

| 66 | 66.5 | -2.1 | -0.4 |

| 69 | 68.5 | -0.1 | -1.1 |

5.2.2 Interpreting Output from Simple Regression

We need to be able to interpret the coefficients, and determine their implications for our research question. We can look at a summary of the results of the simple regression in R as follows:

summary(mod)

Call:

lm(formula = Y ~ X)

Residuals:

Min 1Q Median 3Q Max

-1.13544 -0.36315 0.01185 0.29091 1.46275

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 7.41084 3.88315 1.908 0.0854 .

X 0.90181 0.05717 15.774 2.15e-08 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.6947 on 10 degrees of freedom

Multiple R-squared: 0.9614, Adjusted R-squared: 0.9575

F-statistic: 248.8 on 1 and 10 DF, p-value: 2.153e-08There is a lot of information contained in this output. First, note that the multiple R-squared is .96, which is an estimate of the proportion of measured height (\(Y\)) that can be explained by reported height (\(X\)). We can convert this proportion into a percentage by multiplying it by 100, and state that reported height explains about 96% of the variance in measured height.

Next, we can look at the intercept under the Coefficients: heading of the output. This value is 7.4 (rounding to 1 decimal place). What does this tell us? The intercept is technically the predicted value of the outcome (\(Y\)) when the predictor is zero. I would interpret this to mean that, according to this model, someone who reported their height as zero, would be predicted to have a measured height of a little less than 7 and 1/2 inches. This value is not very meaningful because no graduate students reported a zero height. The intercept is outside the range of the observed data (and theoretical population). Later we will learn how to make the intercept more meaningful. Often, though, the intercept is not the important coefficient. The slope is often what we want to learn about. For this simple regression, the estimated slope is about 0.9. Technically, the slope is the expected change in the outcome for a one unit change in the predictor. For this example, that suggests that, according to this simple regression model, two graduate students who reported their heights as being different by one inch, would be predicted to differ in actual height by about 0.9 inches.

5.3 Real Data Example

#install.packages("NHANES)

library(NHANES)

library(effectsize)

data(NHANES)Next we will look at the variables available in the NHANES dataframe, which is a available in the NHANES package in R. To illustrate how we can make statistical inferences, we can assume we want to know if adult height differs between women and men. Admittedly, this is a ver trivial goal of research, but it will help us to review the topic of statistical inference. To do this we will consider three scenarios.

First, we will pretend that we have no idea about what to expect between variables in this data and are engaging in an exploratory study which includes looking for relations between height and gender.

Second, we will pretend that we suspect that there is a relation between height and gender, possibly among other relations, and we want to know if heights are different between the populations of adult women and men. This is a rough confirmatory study.

Third, we will pretend that a primary goal of our study is to test the hypothesis that men are taller than women. We have a compelling theoretical model that suggest this relation, and we decide to test this hypothesis before we collect or at least before we look at our data. This is a strict confirmatory study.

names(NHANES) [1] "ID" "SurveyYr" "Gender" "Age"

[5] "AgeDecade" "AgeMonths" "Race1" "Race3"

[9] "Education" "MaritalStatus" "HHIncome" "HHIncomeMid"

[13] "Poverty" "HomeRooms" "HomeOwn" "Work"

[17] "Weight" "Length" "HeadCirc" "Height"

[21] "BMI" "BMICatUnder20yrs" "BMI_WHO" "Pulse"

[25] "BPSysAve" "BPDiaAve" "BPSys1" "BPDia1"

[29] "BPSys2" "BPDia2" "BPSys3" "BPDia3"

[33] "Testosterone" "DirectChol" "TotChol" "UrineVol1"

[37] "UrineFlow1" "UrineVol2" "UrineFlow2" "Diabetes"

[41] "DiabetesAge" "HealthGen" "DaysPhysHlthBad" "DaysMentHlthBad"

[45] "LittleInterest" "Depressed" "nPregnancies" "nBabies"

[49] "Age1stBaby" "SleepHrsNight" "SleepTrouble" "PhysActive"

[53] "PhysActiveDays" "TVHrsDay" "CompHrsDay" "TVHrsDayChild"

[57] "CompHrsDayChild" "Alcohol12PlusYr" "AlcoholDay" "AlcoholYear"

[61] "SmokeNow" "Smoke100" "Smoke100n" "SmokeAge"

[65] "Marijuana" "AgeFirstMarij" "RegularMarij" "AgeRegMarij"

[69] "HardDrugs" "SexEver" "SexAge" "SexNumPartnLife"

[73] "SexNumPartYear" "SameSex" "SexOrientation" "PregnantNow" The names are helpful, but we will want to know more about each variable. Because this is a data set from an R package, you can learn more about it using R’s help system as follows:

?NHANESYou should run the above command in RStudio and take a few minutes to read through the resulting help file, which will give you an idea of what is contained in the data, an what each variables captures.

One thing you might have noticed reading through the data description is that height was measured in centimeters (cm). Below I create a variable I call Height_in that converts from centimeters to inches.

NHANES$Height_in <- NHANES$Height/2.54Let’s look at the distribution of heights using this real data.

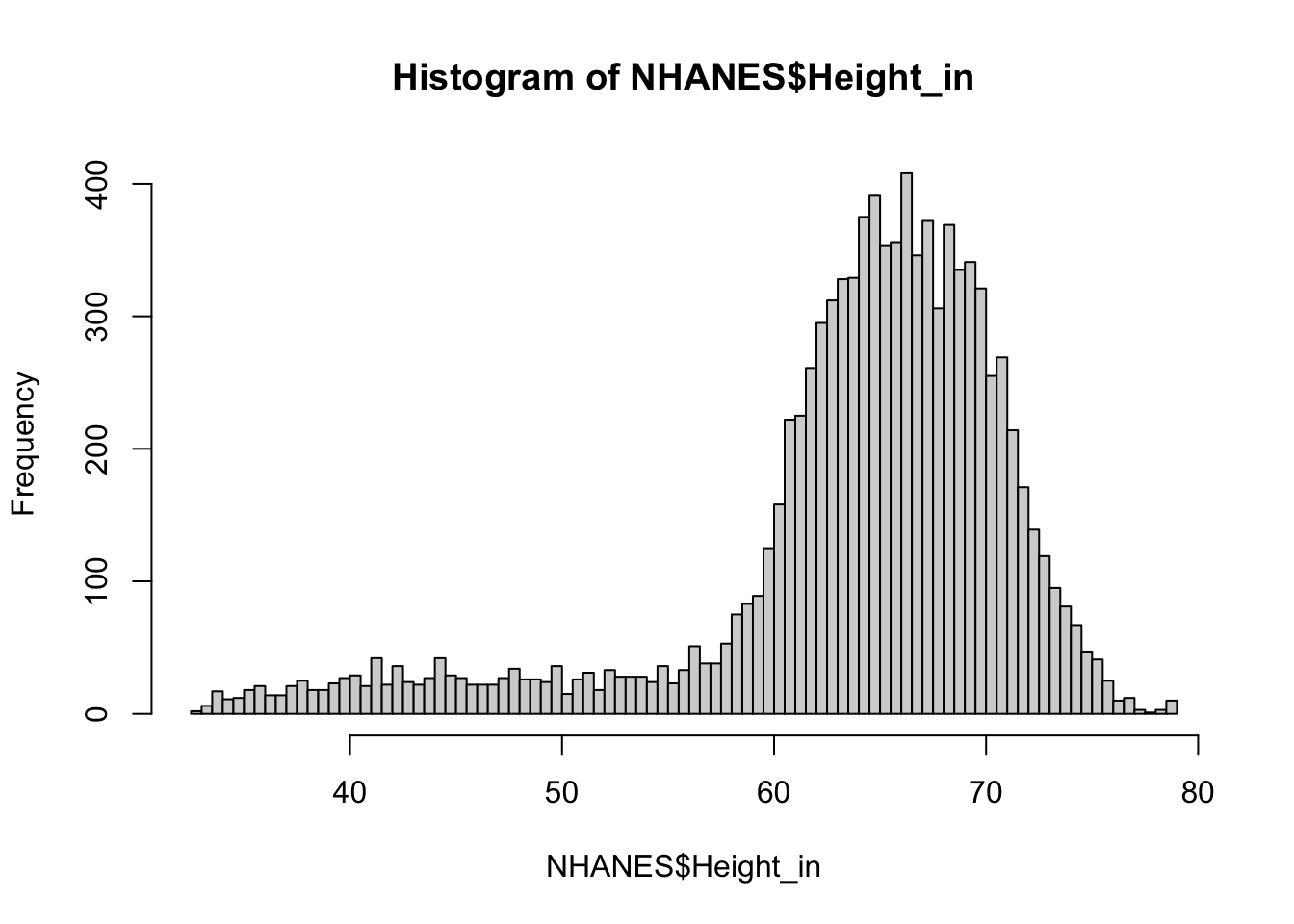

hist(NHANES$Height_in, breaks = "fd")

The data do not look normally distributed. instead, there is a long tail on the left side of the distribution. What do you think is going on here? You may want to read back over the data description. To understand this, take a look at the distribution of the age variable.

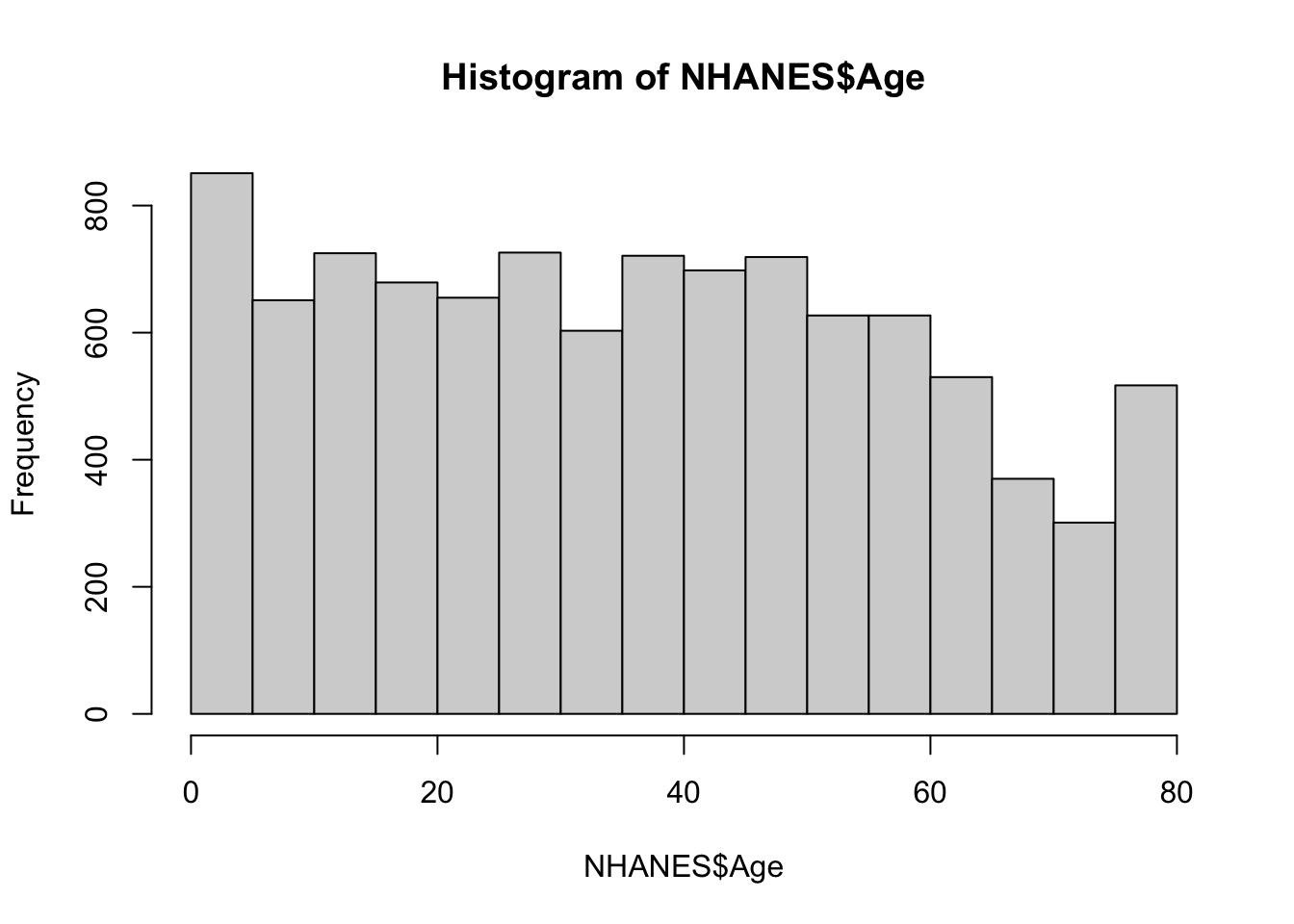

hist(NHANES$Age)

The data contain information on people from birth well into adulthood. Maybe the long left tail of the height distibution is a result of this age distribution. To explore this we can plot height against age.

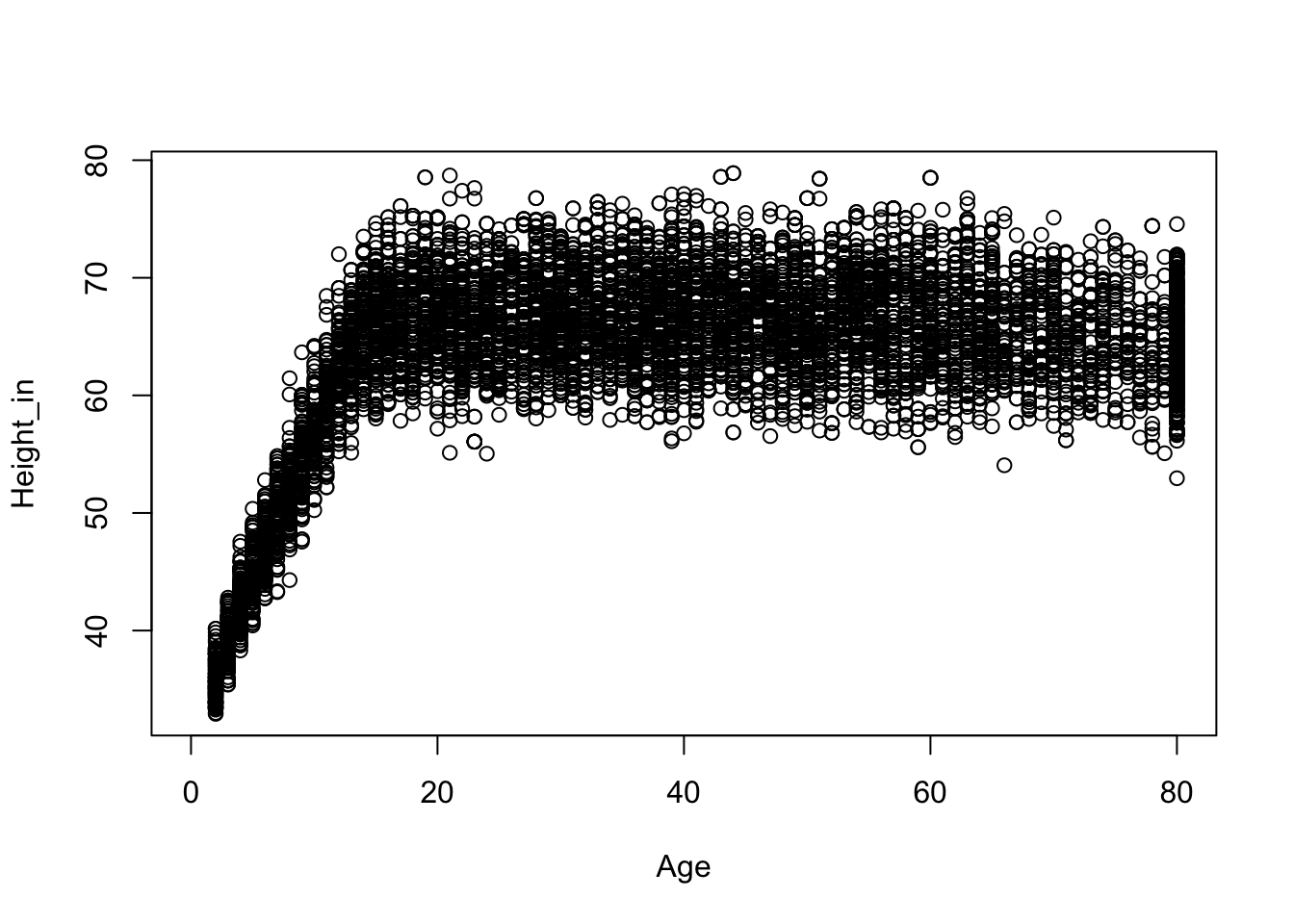

plot(Height_in ~ Age, NHANES)

This plot seems consistent with our hunch. From birth to the late teens we see a relation between height and age. For participants older than 18 or so, we don’t see a relation between height and age. Because we are interested in the height of adults we subset the data to only include those of the age of 18.

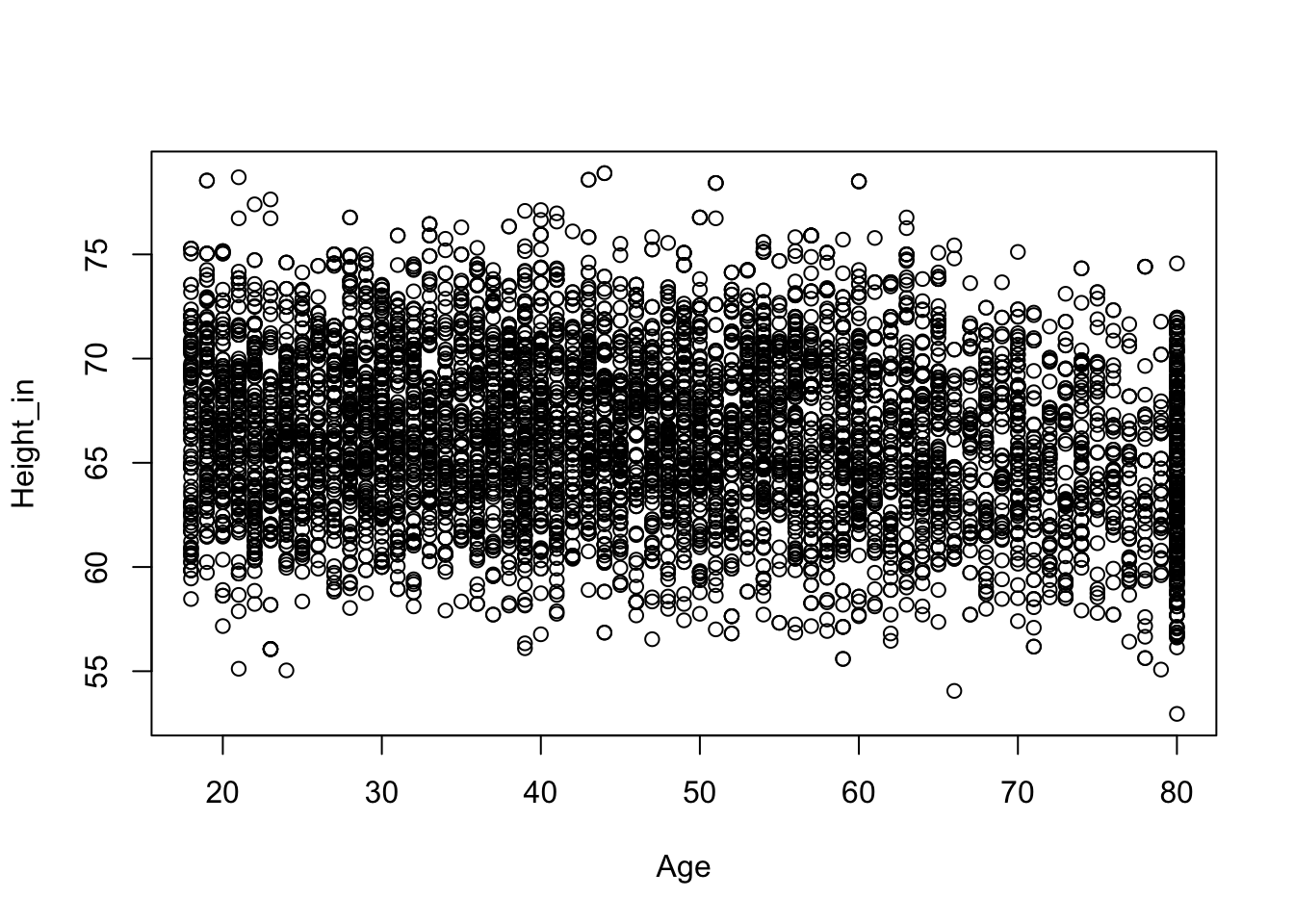

nhanesAdult <- NHANES[NHANES$Age >= 18, ]plot(Height_in ~ Age, nhanesAdult)

We can now look at the distribution of heights among adults.

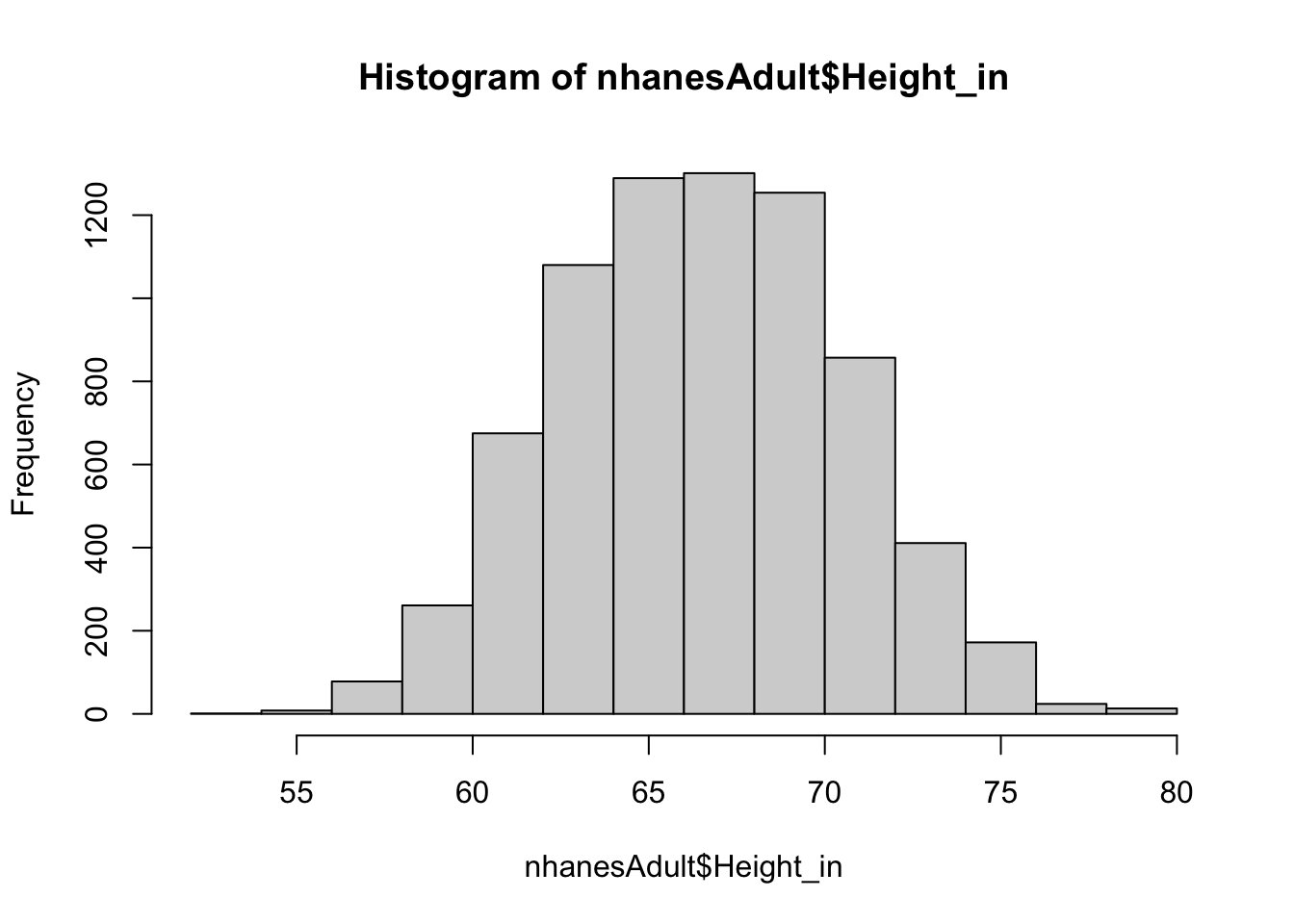

hist(nhanesAdult$Height_in)

This looks more normally distributed, which is what we would expect from a random sample of adults.

We might expect there to be differences in the average heights between males and females. To explore this we can use a boxplot.

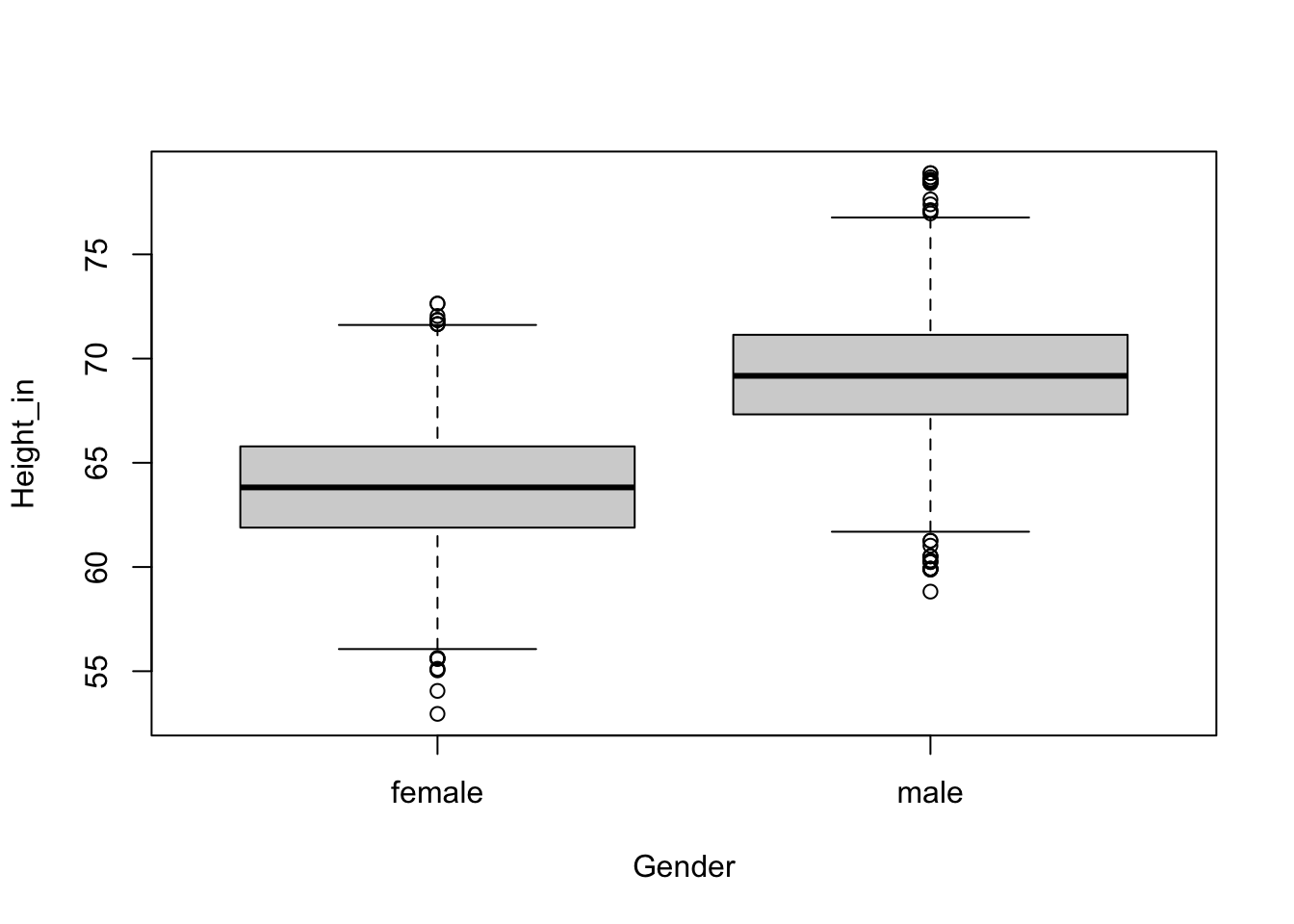

boxplot(Height_in ~ Gender, nhanesAdult)

We do see that the median height of males is greater than that of females. The following code gives us the sample means for each gender category.

aggregate(Height_in ~ Gender, data = NHANES, FUN = mean) Gender Height_in

1 female 61.65979

2 male 65.82336To review what we learned about statistical inference first we will consider our three scenarios.

5.3.1 Exploratory Study

Recall that if we have no idea about what to expect between variables in this data then we are engaging in an exploratory study which includes looking for relations between height and gender.

The appropriate comparisons to make are graphics, means, confidence intervals, and effect sizes of the two group’s heights (Fife & Rodgers, 2022).

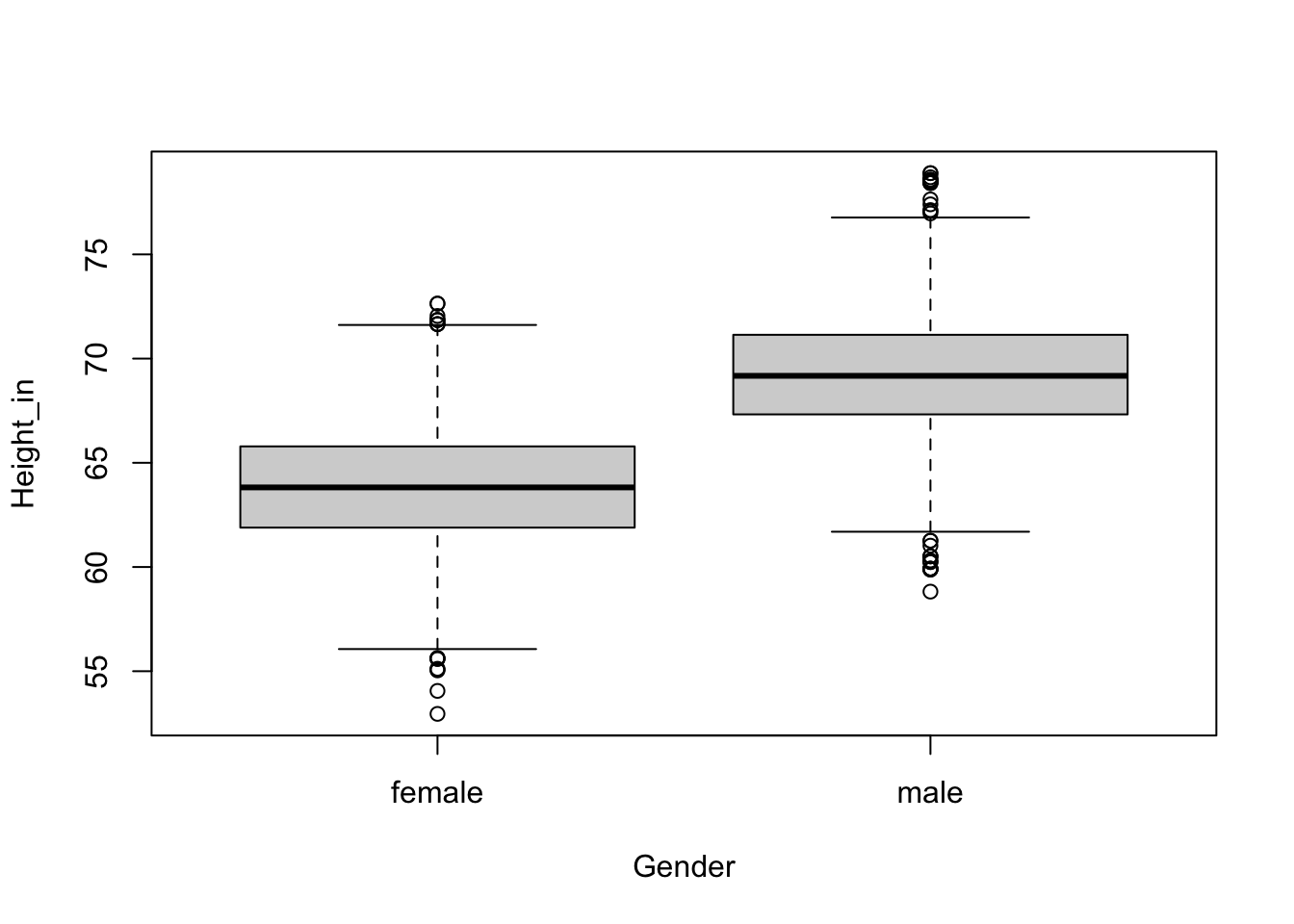

ht.gender_model <- lm(Height_in ~ Gender, data =nhanesAdult)

boxplot(Height_in ~ Gender, data = nhanesAdult)

summary(ht.gender_model)

Call:

lm(formula = Height_in ~ Gender, data = nhanesAdult)

Residuals:

Min 1Q Median 3Q Max

-10.8489 -1.9176 -0.0221 1.9070 9.6572

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 63.80166 0.04733 1348.00 <2e-16 ***

Gendermale 5.43876 0.06743 80.66 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 2.905 on 7422 degrees of freedom

(57 observations deleted due to missingness)

Multiple R-squared: 0.4671, Adjusted R-squared: 0.467

F-statistic: 6506 on 1 and 7422 DF, p-value: < 2.2e-16confint(ht.gender_model) 2.5 % 97.5 %

(Intercept) 63.708878 63.894440

Gendermale 5.306584 5.570939cohens_d(Height_in ~ Gender, data =nhanesAdult)Warning: Missing values detected. NAs dropped.Cohen's d | 95% CI

--------------------------

-1.87 | [-1.93, -1.82]

- Estimated using pooled SD.It is very important to note that a probabilistic interpretation is NOT appropriate for the p values. The p-value of less than .001 for the gender coefficient in the linear model output, should not be used as evidence against the null hypothesis. It would also be very important to report all the tests and comparisons conducted to be transparent about what was done in the study.

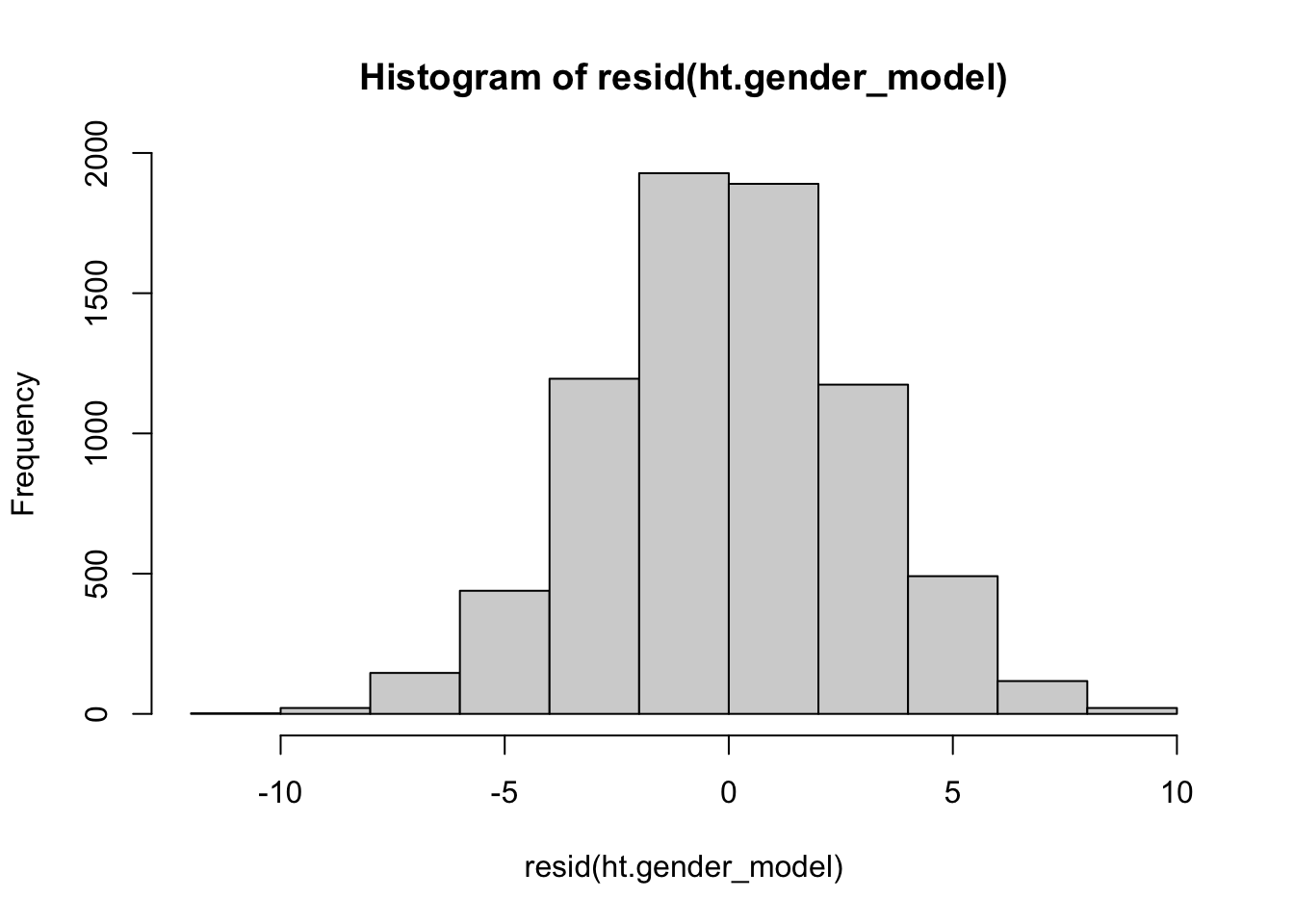

You should also explore the residuals of the model. We will talk about more sophisticated ways to explore residuals later, for now. Such studies should be followed collecting new data to use one of the other types of studies described below.

hist(resid(ht.gender_model))

5.3.2 Rough Confirmatory Study

If originally we suspected that there is a relation between height and gender, possibly among other relations of interest, and we want to know if heights are different between the populations of adult women and men, this study could be considered a rough confirmatory study.

In that situation it would also be appropriate to use graphs, means, and effect sizes, along with confidence intervals. All of the output we obtained above could be used. The difference is that you may emphasize the confidence intervals of the linear model coefficients

confint(ht.gender_model) 2.5 % 97.5 %

(Intercept) 63.708878 63.894440

Gendermale 5.306584 5.570939Such studies should be followed by strict confirmatory studies if there is support for any of the hypotheses. All analyses and exploratory techniques should be reported transparently.

5.3.3 Strict Confirmatory Study

This study requires that specific hypotheses related to theory are posited before the data are collected. Then those, and only those hypotheses should be tested and interpreted in the manner that follows. Any additional analyses should be labeled as exploratory and interpreted as in the exploratory study section above. This includes adding any sub-group analyses. If you just happen to find another predictor, say a variable you entered as a covariate to reduce model error, that happens to have a small p-value, it is not appropriate to interpret that as evidence against a null hypothesis, because you did not posit that hypothesis. Such an error is known as HARKing, which stands for Hypothesizing After the Results are Known. It’s bad. Really bad.

summary(ht.gender_model)

Call:

lm(formula = Height_in ~ Gender, data = nhanesAdult)

Residuals:

Min 1Q Median 3Q Max

-10.8489 -1.9176 -0.0221 1.9070 9.6572

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 63.80166 0.04733 1348.00 <2e-16 ***

Gendermale 5.43876 0.06743 80.66 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 2.905 on 7422 degrees of freedom

(57 observations deleted due to missingness)

Multiple R-squared: 0.4671, Adjusted R-squared: 0.467

F-statistic: 6506 on 1 and 7422 DF, p-value: < 2.2e-16